Ruth Rosenholtz

The Visual System as Statistician

In studying the workings of the visual system, we are often faced with complicated and seemingly messy empirical results from behavioral experiments. For instance, against a neutral gray background it is easier to find a red target among pink distractors than a pink target among red distractors. Is this because the brain has detectors that respond strongly to red and not pink, but no detectors that respond more strongly to pink than red? One of our key tasks as researchers is to ask whether the data necessitate an explanation like this, in which special status is given to certain features, operations, and so on. Or is there a simpler explanation? Is the observed behavior in some sense ideal? Questions of this sort often lead us to examine statistical models of human behavior.

Much of the work in our lab has tested the hypothesis that the visual system, in many instances, operates as a statistician. In other words, the visual system samples noisy feature estimates from the visual input, in many cases computes summary statistics such as the mean and variance of those features, and makes intelligent decisions based on those statistics.
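The sample, summarize, and decide steps described above can be illustrated with a minimal Python sketch. It assumes a single scalar feature (item tilt in degrees, treated as a linear quantity, which is reasonable only for small tilts), Gaussian sensory noise with a hypothetical standard deviation, and a toy decision rule. The function names are illustrative and are not taken from the lab's own code.

```python
import numpy as np

def sample_noisy_features(true_values, noise_sd, rng):
    """Return one noisy estimate per item (hypothetical Gaussian sensory noise)."""
    true_values = np.asarray(true_values, dtype=float)
    return true_values + rng.normal(0.0, noise_sd, size=true_values.shape)

def summarize(samples):
    """Summary statistics of the sampled features: mean and unbiased variance."""
    samples = np.asarray(samples, dtype=float)
    return {"mean": float(np.mean(samples)), "var": float(np.var(samples, ddof=1))}

def decide_tilt(summary, criterion=0.0):
    """Toy decision rule: report 'clockwise' if the mean tilt exceeds the criterion."""
    return "clockwise" if summary["mean"] > criterion else "counterclockwise"

rng = np.random.default_rng(0)
tilts = [5, 8, 3, 6, 7, 4, 9, 5]  # hypothetical item tilts in degrees (positive = clockwise)
samples = sample_noisy_features(tilts, noise_sd=2.0, rng=rng)
summary_stats = summarize(samples)
print(summary_stats, decide_tilt(summary_stats))
```

The decision here depends only on the summary statistics, not on any individual item, which is the essential property of the hypothesis; a real observer model would also need a principled criterion and noise level.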

Models of this form have proven quite powerful in predicting behavior on visual tasks, particularly when processing must be fast or “pre-attentive,” or when the stimulus is viewed in the periphery. For example, many existing visual search results can be qualitatively predicted by a model that extracts the mean and covariance of basic features such as motion, color, and orientation.
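One simple way to turn the mean and covariance into a search prediction is to score the target by its Mahalanobis distance from the distractor feature distribution: a target should be easy to find when it lies far from the distractors relative to how much they vary. The sketch below assumes that form, uses a hypothetical two-dimensional feature (for instance, the horizontal and vertical components of motion), and estimates the statistics directly from the distractors rather than from noisy samples.

```python
import numpy as np

def mahalanobis_saliency(target, distractors):
    """Mahalanobis distance of a target feature vector from the distractor
    distribution, using the distractors' sample mean and covariance."""
    distractors = np.asarray(distractors, dtype=float)
    mu = distractors.mean(axis=0)
    cov = np.cov(distractors, rowvar=False)
    diff = np.asarray(target, dtype=float) - mu
    # Solve cov @ x = diff instead of forming the explicit inverse.
    return float(np.sqrt(diff @ np.linalg.solve(cov, diff)))

rng = np.random.default_rng(1)
# Hypothetical 2-D features: distractors vary a lot along x, little along y.
distractors = rng.multivariate_normal([0.0, 0.0], [[4.0, 0.0], [0.0, 0.25]], size=200)
along_wide = mahalanobis_saliency([2.0, 0.0], distractors)    # offset along high-variance axis
along_narrow = mahalanobis_saliency([0.0, 2.0], distractors)  # same offset, low-variance axis
print(f"{along_wide:.2f} {along_narrow:.2f}")
```

Both targets are the same Euclidean distance from the distractor mean, yet their scores come out close to 1 and 4 respectively: under this kind of model, the spread of the distractors, and not only the raw feature difference, determines how conspicuous a target is.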

Pre-attentive texture segmentation, similarly, is well modeled by a process that samples features from each side of a hypothesized texture boundary and performs the equivalent of a t-test (comparing means) and an F-test (comparing variances) to determine whether the textures differ significantly in their statistics. If they do, the observer is likely to perceive a boundary.
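A boundary decision of this kind can be sketched with standard two-sample tests. This version assumes one scalar feature per texture element (e.g. local orientation), uses SciPy's two-sample t-test for the means and a two-sided variance-ratio F-test for the variances, and reports a boundary if either test is significant at a hypothetical level `alpha`. The published model may differ in its features, sampling, and decision rule.

```python
import numpy as np
from scipy import stats

def texture_boundary_test(left, right, alpha=0.05):
    """Compare feature samples from the two sides of a hypothesized boundary.

    Returns (boundary_perceived, p_mean, p_var)."""
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    # t-test: do the two sides differ in mean feature value?
    p_mean = float(stats.ttest_ind(left, right).pvalue)
    # F-test: do they differ in variance? Two-sided p-value for the variance ratio.
    f_ratio = np.var(left, ddof=1) / np.var(right, ddof=1)
    dfn, dfd = left.size - 1, right.size - 1
    p_var = float(2 * min(stats.f.cdf(f_ratio, dfn, dfd), stats.f.sf(f_ratio, dfn, dfd)))
    # Toy decision rule: perceive a boundary if either statistic differs significantly.
    return bool(p_mean < alpha or p_var < alpha), p_mean, p_var

rng = np.random.default_rng(2)
same = texture_boundary_test(rng.normal(45, 10, 100), rng.normal(45, 10, 100))
diff_mean = texture_boundary_test(rng.normal(0, 10, 100), rng.normal(30, 10, 100))
diff_var = texture_boundary_test(rng.normal(45, 5, 100), rng.normal(45, 20, 100))
print(same, diff_mean, diff_var)
```

Note that the "different variance" pair has identical means, so only the F-test detects the boundary. Because two tests are run at the same `alpha`, identical textures will occasionally produce a boundary by chance.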

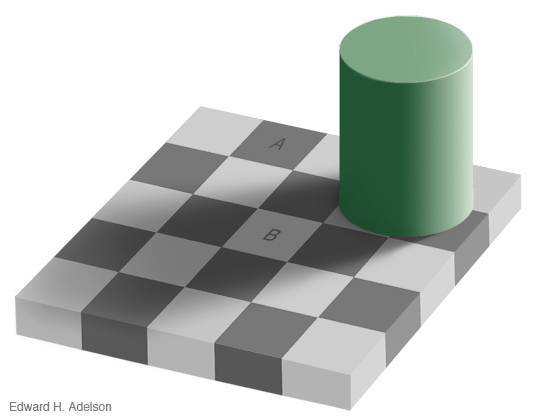

More recent work in our lab suggests that one can predict the difficulty of a task in peripheral vision (i.e., under conditions of crowding) with a model that represents peripheral stimuli not through the exact configuration of their parts, but through the joint statistics of the responses of V1-like receptive fields. This model shows promise for predicting a wide variety of visual phenomena, from optical illusions to search reaction times for arbitrary search displays.
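The idea of representing a peripheral patch by pooled statistics of V1-like responses can be sketched as follows. This is a deliberately crude stand-in: the "receptive fields" are rectified directional derivatives at four orientations, the pooling region is a single fixed disk, and the "joint statistics" are just per-orientation means and variances plus the correlations between orientation channels. The published model uses a far richer set of statistics, and pooling regions in peripheral vision are thought to grow with eccentricity. The helper names are hypothetical.

```python
import numpy as np

def oriented_responses(image, n_orient=4):
    """Crude V1-like responses: rectified directional derivatives at n_orient
    evenly spaced orientations. Returns an array of shape (n_orient, H, W)."""
    gy, gx = np.gradient(np.asarray(image, dtype=float))  # derivatives along rows, columns
    thetas = np.arange(n_orient) * np.pi / n_orient
    return np.stack([np.abs(np.cos(t) * gx + np.sin(t) * gy) for t in thetas])

def pooled_statistics(responses, mask):
    """Summary statistics of the responses inside one pooling region (boolean mask)."""
    r = responses[:, mask]  # shape (n_orient, n_pixels_in_region)
    means = r.mean(axis=1)
    variances = r.var(axis=1)
    corr = np.corrcoef(r)   # joint statistics: correlations between orientation channels
    return means, variances, corr

rng = np.random.default_rng(3)
x = np.arange(64)
grating = np.tile(np.sin(2 * np.pi * x / 8), (64, 1))  # luminance varies along x
image = grating + 0.05 * rng.normal(size=(64, 64))
yy, xx = np.mgrid[:64, :64]
mask = (yy - 32) ** 2 + (xx - 32) ** 2 < 16 ** 2  # hypothetical circular pooling region
means, variances, corr = pooled_statistics(oriented_responses(image), mask)
print(np.round(means, 3))
```

For this vertical grating, the channel at theta = 0 (which responds to luminance changes along x) dominates the pooled means, while the exact positions of the stripes inside the disk are discarded. That loss of configural detail is the kind of information loss the model uses to account for crowding.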

This approach to studying vision has several benefits. First and foremost, the resulting models often work well. They typically have fewer parameters than neurally inspired models, which, when analyzed, often turn out to perform similar calculations implicitly. Statistical models are also often surprisingly easy to implement in biologically inspired hardware, and equally easy to implement as computer vision algorithms, which can then make predictions for arbitrarily complex natural images.


Bibliography

- Beyond Bouma’s window: How to explain global aspects of crowding? A. Doerig, A. Bornet, R. Rosenholtz, G. Francis, A. M. Clarke, and M. H. Herzog. PLOS Computational Biology, 2019.

- Context mitigates crowding: Peripheral object recognition in real-world images. M. W. A. Wijntjes and R. Rosenholtz. Cognition, 2018.

- Capacity limits and how the visual system copes with them. R. Rosenholtz. HVEI, 2017.

- Those pernicious items. R. Rosenholtz. Behavioral and Brain Sciences, 2017.

- A general account of peripheral encoding also predicts scene perception performance. K. A. Ehinger and R. Rosenholtz. Journal of Vision, 2016.

- Capabilities and limitations of peripheral vision. R. Rosenholtz. Annual Review of Vision Science, 2016.

- Search performance is better predicted by tileability than by the presence of a unique basic feature. H. Chang and R. Rosenholtz. Journal of Vision, 2016.

- Cube search, revisited. X. Zhang, J. Huang, S. Yigit-Elliott, and R. Rosenholtz. Journal of Vision, 2015.

- Texture perception. R. Rosenholtz. Handbook of Perceptual Organization, 2014.

- Rethinking the role of top-down attention in vision: Effects attributable to a lossy representation in peripheral vision. R. Rosenholtz, J. Huang, and K. A. Ehinger. Frontiers in Psychology, 2012.

- A summary statistic representation in peripheral vision explains visual search. R. Rosenholtz, J. Huang, A. Raj, B. J. Balas, and L. Ilie. Journal of Vision, 2012.

- What your visual system sees where you are not looking. R. Rosenholtz. Proc. SPIE: HVEI, 2011.

- What your design looks like to peripheral vision. A. Raj and R. Rosenholtz. APGV, 2010.

- A summary-statistic representation in peripheral vision explains visual crowding. B. Balas, L. Nakano, and R. Rosenholtz. Journal of Vision, 2009.

- Uniform versus random orientation in fading and filling-in. C. H. Attar, K. Hamburger, R. Rosenholtz, H. Gotzl, and L. Spillmann. Vision Research, 2007.

- The effect of background color on asymmetries in color search. R. Rosenholtz, A. L. Nagy, and N. R. Bell. Journal of Vision, 2004.

- Search asymmetries? What search asymmetries? R. Rosenholtz. Perception & Psychophysics, 2001.

- General-purpose localization of textured image regions. R. Rosenholtz. NIPS, 1999.

- A simple saliency model predicts a number of motion popout phenomena. R. Rosenholtz. Vision Research, 1999.